How might we enhance the experience of telepresence on TeleWindow with approachable technical improvements?

Background

TeleWindow is an ongoing telepresence research project being conducted under the guidance of Michael Naimark. The aim is to explore how a two-way, one-to-one "telepresence window" communication system can be developed, and how such a system can assist communication in the digital age.

By combining RGB-D capture, head/eye tracking, and a stereoscopic display, we aim to prototype a device that offers features such as two-way eye contact, stereoscopy, and telepresence, all in realtime.

In 2020, I spent six months on the TeleWindow project, researching and running technical experiments to address identified problems and the untapped potential of the hardware.

Previous Progress

Currently, we use four Intel RealSense cameras to create a volumetric pointcloud representation of a person. We then correct the perspective based on the user's head/eye positions. The final imagery is rendered stereoscopically and shown on an eye-tracked autostereoscopic display from SeeFront.
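
The text does not say how the head-dependent perspective correction is implemented. A common technique for "window"-style displays is off-axis (asymmetric frustum) projection, sketched below under stated assumptions: the screen is centred at the origin in the z = 0 plane, units are metres, and the tracked head position is already expressed in that screen frame. `Frustum`, `off_axis_frustum`, and `stereo_frustums` are hypothetical names for illustration, not part of the TeleWindow code.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Frustum:
    """Near-plane bounds of an asymmetric (off-axis) view frustum."""
    left: float
    right: float
    bottom: float
    top: float
    near: float


def off_axis_frustum(eye: Vec3, screen_w: float, screen_h: float, near: float) -> Frustum:
    """Frustum for an eye at (ex, ey, ez) relative to the screen centre.

    Assumes the screen lies in the z = 0 plane, centred at the origin,
    with the viewer at ez > 0 looking toward -z. Units are metres.
    """
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (ez > 0)")
    scale = near / ez  # similar triangles: screen edges projected onto the near plane
    return Frustum(
        left=(-screen_w / 2 - ex) * scale,
        right=(screen_w / 2 - ex) * scale,
        bottom=(-screen_h / 2 - ey) * scale,
        top=(screen_h / 2 - ey) * scale,
        near=near,
    )


def stereo_frustums(head: Vec3, ipd: float, screen_w: float, screen_h: float,
                    near: float) -> Tuple[Frustum, Frustum]:
    """Left/right eye frustums, offsetting the tracked head by half the IPD along x."""
    hx, hy, hz = head
    left_eye = (hx - ipd / 2, hy, hz)
    right_eye = (hx + ipd / 2, hy, hz)
    return (off_axis_frustum(left_eye, screen_w, screen_h, near),
            off_axis_frustum(right_eye, screen_w, screen_h, near))
```

Each frustum would be paired with a view transform that translates the scene by the negated eye position; the four bounds plus near/far are the same parameters a `glFrustum`-style projection matrix takes. Because the frustum follows the eye, the screen behaves like a window rather than a fixed camera view.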

Identified Problems

Visual Artifacts

Imperfectly aligned pointclouds introduce visual artifacts on TeleWindow. To align the four realtime pointclouds, we used four static transformation matrices computed before the realtime session. The matrices were calculated from a sample capture using the ICP (Iterative Closest Point) pointcloud registration algorithm.

The assumption was that, since the four cameras are fixed relative to each other on a display-mounted rig, the pre-calculated transformations would hold once and for all. In practice, however, the pre-calculation failed to align all pointclouds correctly, especially for objects close to the cameras, due to perspective distortion and noise.
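
To make the runtime side of this setup concrete, here is a minimal sketch of applying the four static matrices: each camera's points are mapped into a shared rig frame and concatenated. It assumes row-major 4x4 rigid transforms (camera-to-rig) and leaves out the offline ICP step that produced them; `transform_point` and `merge_clouds` are hypothetical helpers.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Matrix4 = Sequence[Sequence[float]]  # row-major 4x4, last row (0, 0, 0, 1)


def transform_point(m: Matrix4, p: Point) -> Point:
    """Apply a rigid 4x4 transform to a 3D point (homogeneous w = 1)."""
    x, y, z = p
    return (
        m[0][0] * x + m[0][1] * y + m[0][2] * z + m[0][3],
        m[1][0] * x + m[1][1] * y + m[1][2] * z + m[1][3],
        m[2][0] * x + m[2][1] * y + m[2][2] * z + m[2][3],
    )


def merge_clouds(clouds: Sequence[Sequence[Point]],
                 extrinsics: Sequence[Matrix4]) -> List[Point]:
    """Map every camera's points into the shared rig frame and concatenate them.

    extrinsics[i] is the precomputed camera-i-to-rig matrix. It is never
    updated at runtime, so any error in it shows up in every frame.
    """
    if len(clouds) != len(extrinsics):
        raise ValueError("need one transform per camera")
    merged: List[Point] = []
    for cloud, m in zip(clouds, extrinsics):
        merged.extend(transform_point(m, p) for p in cloud)
    return merged
```

Since a single rigid matrix per camera cannot model depth-dependent errors, a calibration that looks correct at one distance can still produce doubled surfaces closer to the cameras.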

Imperfectly aligned pointclouds result in overlapping parts of faces.

Not quite "volumetric"

Pointclouds also fall short in representing truly volumetric objects. Although they offer relatively high visual fidelity at minimal computational cost, there is not much you can do with the data. Because a pointcloud, unlike a mesh, has no associated surface geometry, pointcloud objects have limited potential to be "physically" represented in the virtual world (e.g., reacting to lighting).

Research

With these two problems in mind, I conducted technical research and experiments over six months. Much of the inspiration came from existing literature on specialized telepresence systems and from my background in computer graphics.

Surface Reconstruction

To eliminate unwanted artifacts, recent literature suggests that the best solution is to reconstruct surfaces from the pointclouds rather than fine-tune their alignment.

The State-of-the-art Approach

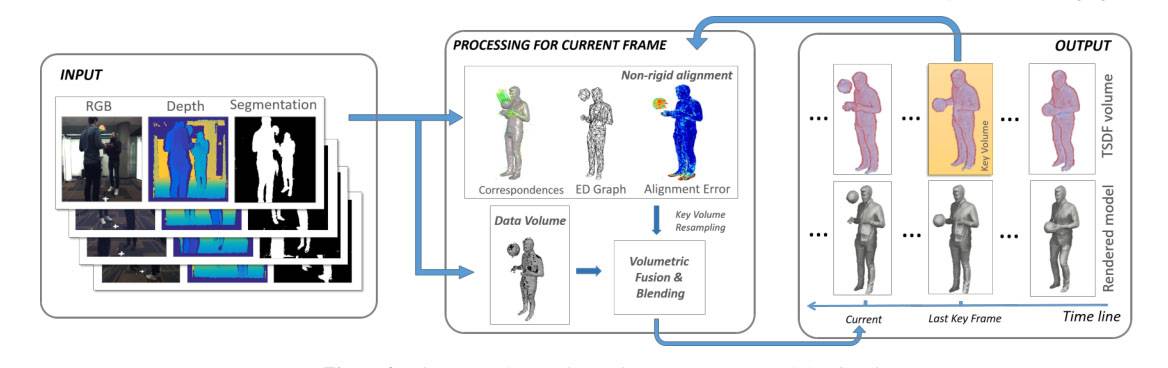

Many telepresence research systems use their own pipelines to achieve 3D reconstruction. Microsoft Research, for example, approached scene reconstruction in 2016 with Fusion4D, a system for live performance capture.

However, a similar approach would require a far more sophisticated pipeline for high-quality reconstruction, one that relies on proprietary algorithms from Microsoft. In addition, our use case differs from the one in that research: our RGB-D cameras are positioned much closer to each other and to the subject, producing heavily overlapping fields of view.

Alternative Approach

To validate the idea of reconstruction, we looked for alternative algorithms. Unfortunately, most simple methods in the current literature are not fast enough for realtime computation.

Marching cubes is one of the most widely used surface reconstruction techniques and is common in medical imaging. The algorithm can be accelerated on the GPU, and previous research has shown that it can reach 30 fps on pointclouds of comparable size to TeleWindow's.
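
For readers unfamiliar with marching cubes, the sketch below shows its per-cube core: classify the eight corners against an iso-value into an 8-bit case index, then place a vertex on every edge the surface crosses by linear interpolation. It deliberately omits the 256-case triangle table that connects those vertices into triangles. The corner/edge numbering and the "below iso counts as inside" convention are one common choice, assumed here; on a GPU, the same logic would typically run once per voxel in a compute-shader thread.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

# One common corner numbering: corners 0-3 form the bottom face (z = 0),
# corners 4-7 the top face (z = 1), each listed counter-clockwise.
CORNERS = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
           (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)]
# The 12 cube edges as pairs of corner indices.
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0), (4, 5), (5, 6), (6, 7), (7, 4),
         (0, 4), (1, 5), (2, 6), (3, 7)]


def cube_index(values: Sequence[float], iso: float) -> int:
    """8-bit case index: bit i is set when corner i lies below the iso-value."""
    index = 0
    for i, v in enumerate(values):
        if v < iso:
            index |= 1 << i
    return index


def interpolate_edge(p0: Vec3, p1: Vec3, v0: float, v1: float, iso: float) -> Vec3:
    """Place the surface crossing on an edge by linear interpolation."""
    if v0 == v1:  # degenerate edge; fall back to the midpoint
        return tuple((a + b) / 2 for a, b in zip(p0, p1))
    t = (iso - v0) / (v1 - v0)
    return tuple(a + t * (b - a) for a, b in zip(p0, p1))


def crossing_points(origin: Vec3, size: float, values: Sequence[float],
                    iso: float) -> List[Vec3]:
    """Surface/edge intersections for one cube.

    A full implementation would feed these into the 256-case triangle
    table to emit triangles; that table is omitted here for brevity.
    """
    idx = cube_index(values, iso)
    if idx in (0, 255):  # entirely outside or inside: no surface passes through
        return []
    points = []
    for a, b in EDGES:
        # An edge is crossed when exactly one of its endpoints is "inside".
        if ((idx >> a) & 1) != ((idx >> b) & 1):
            pa = tuple(o + size * c for o, c in zip(origin, CORNERS[a]))
            pb = tuple(o + size * c for o, c in zip(origin, CORNERS[b]))
            points.append(interpolate_edge(pa, pb, values[a], values[b], iso))
    return points
```

Because every cube is processed independently, the algorithm parallelizes naturally, which is what makes GPU acceleration practical.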

We decided to proceed with marching cubes; the outcome is discussed in the Results section.

Virtual Relighting

Perform realistic relighting on faces using volumetric information from RGB-D cameras.

Mainstream Approach

The most common use of the depth information in a portrait is relighting the face under poor lighting conditions. Recent literature mostly suggests AI-based approaches performed on static images, which do not apply to our use case (realtime pointclouds in 3D).

Realistic Approach

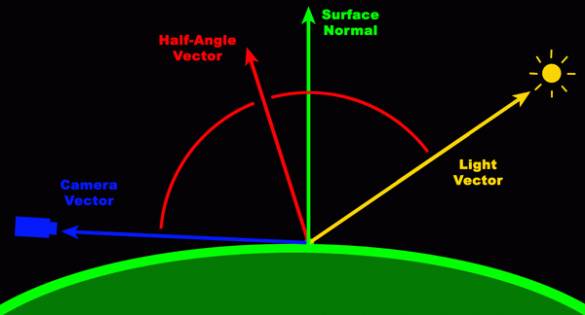

The essential information for lighting an object in 3D space is the normal vector at each vertex, which determines how directional light reflects at a given point. Specifically for TeleWindow, normals for the pointclouds can be obtained by fetching and processing the depth maps directly from the RGB-D cameras.
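
As a minimal illustration of why normals matter, the simplest lighting model, Lambertian diffuse, scales a point's brightness by the clamped dot product of its unit normal N and the unit direction L toward the light. The text does not specify which lighting model the Unity material used, so this is an assumed, illustrative model; `normalize` and `lambert` are hypothetical helpers.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(sum(c * c for c in v))
    if length == 0:
        raise ValueError("cannot normalize a zero vector")
    return tuple(c / length for c in v)


def lambert(normal: Vec3, light_dir: Vec3, albedo: float = 1.0,
            intensity: float = 1.0) -> float:
    """Lambertian diffuse term for a directional light.

    light_dir points from the surface toward the light. Surfaces facing
    away from the light (negative N.L) receive no diffuse light.
    """
    n = normalize(normal)
    l = normalize(light_dir)
    n_dot_l = sum(a * b for a, b in zip(n, l))
    return albedo * intensity * max(0.0, n_dot_l)
```

Without a normal there is no N.L term to evaluate, which is why raw pointclouds cannot respond to lights in the scene.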

I had implemented similar rendering pipelines in my past research on fluid rendering.

Therefore, in the later phase of the research, my goal was to implement custom shaders that generate additional information, allowing pointclouds to react to lighting.

Implementation

On top of the existing Unity project for TeleWindow, I experimented with surface reconstruction (marching cubes) and implemented a prototype for relighting pointclouds.

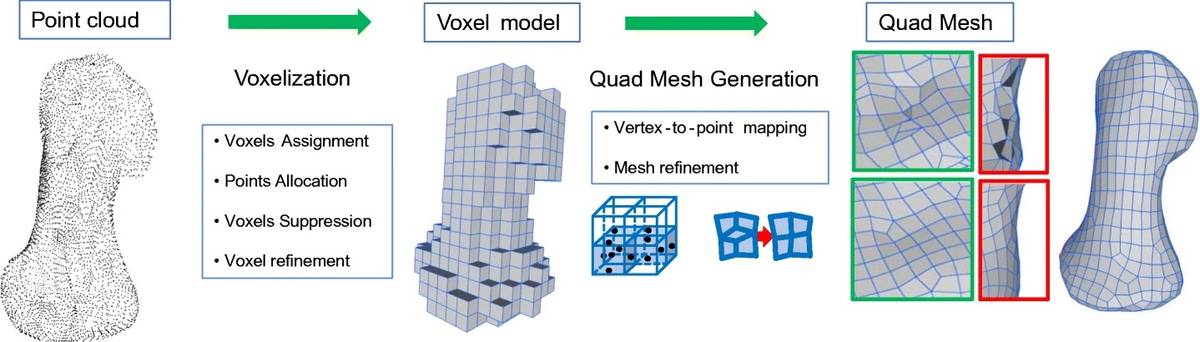

Marching Cubes

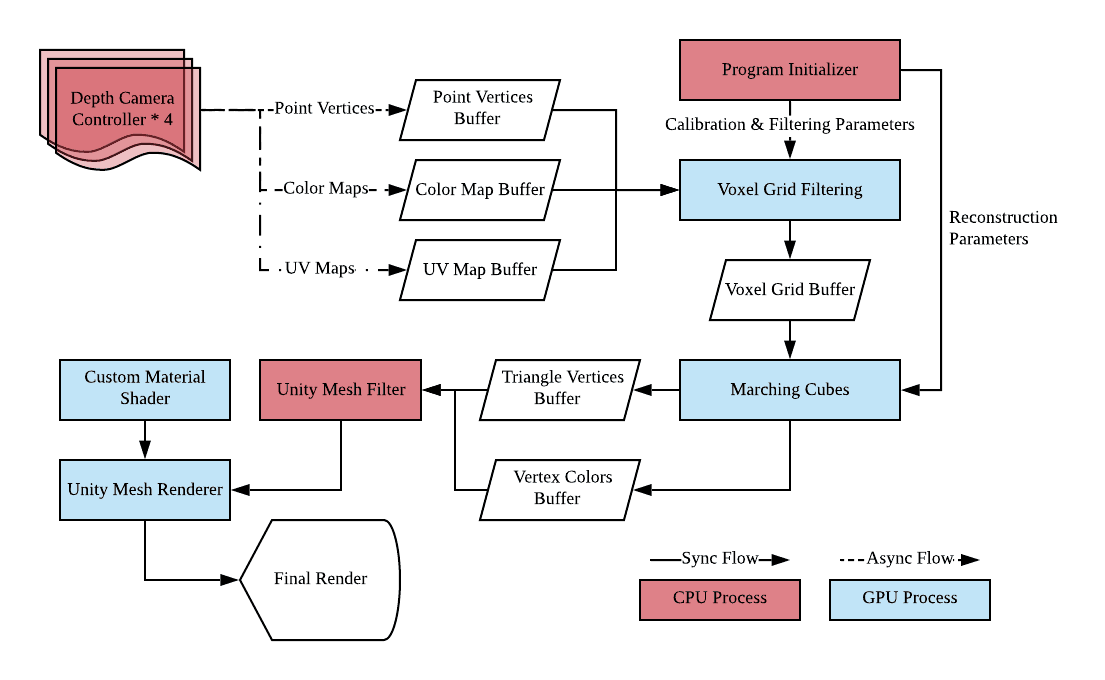

By programming multiple compute shaders in Unity, I distributed the computation of marching cubes on GPU to ensure realtime performance. Here is a diagram of the pipeline of our final implementation:
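
Marching cubes operates on a scalar grid rather than on raw points, so a pipeline like this needs a stage that turns the pointcloud into voxels before the cubes can be classified. The sketch below is a CPU illustration of one simple way to do that, counting points per voxel; it is an assumption for illustration, not the stages shown in the diagram. `voxelize` and its `min_points` threshold are hypothetical, and on a GPU the loop would typically become one thread per point doing an atomic increment into a dense buffer.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def voxelize(points: Iterable[Point], origin: Point, voxel_size: float,
             resolution: int, min_points: int = 1) -> Dict[Voxel, int]:
    """Count points per voxel of a resolution^3 grid, then drop sparse voxels.

    A dict stands in for the dense GPU buffer to keep the sketch small.
    """
    counts: Dict[Voxel, int] = defaultdict(int)
    ox, oy, oz = origin
    for x, y, z in points:
        key = (math.floor((x - ox) / voxel_size),
               math.floor((y - oy) / voxel_size),
               math.floor((z - oz) / voxel_size))
        if all(0 <= k < resolution for k in key):  # discard points outside the grid
            counts[key] += 1
    # min_points > 1 acts as a crude noise filter for isolated stray points.
    return {k: c for k, c in counts.items() if c >= min_points}
```

The per-voxel counts (or a smoothed version of them) can then serve as the scalar field that marching cubes thresholds.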

Normal-Based Relighting

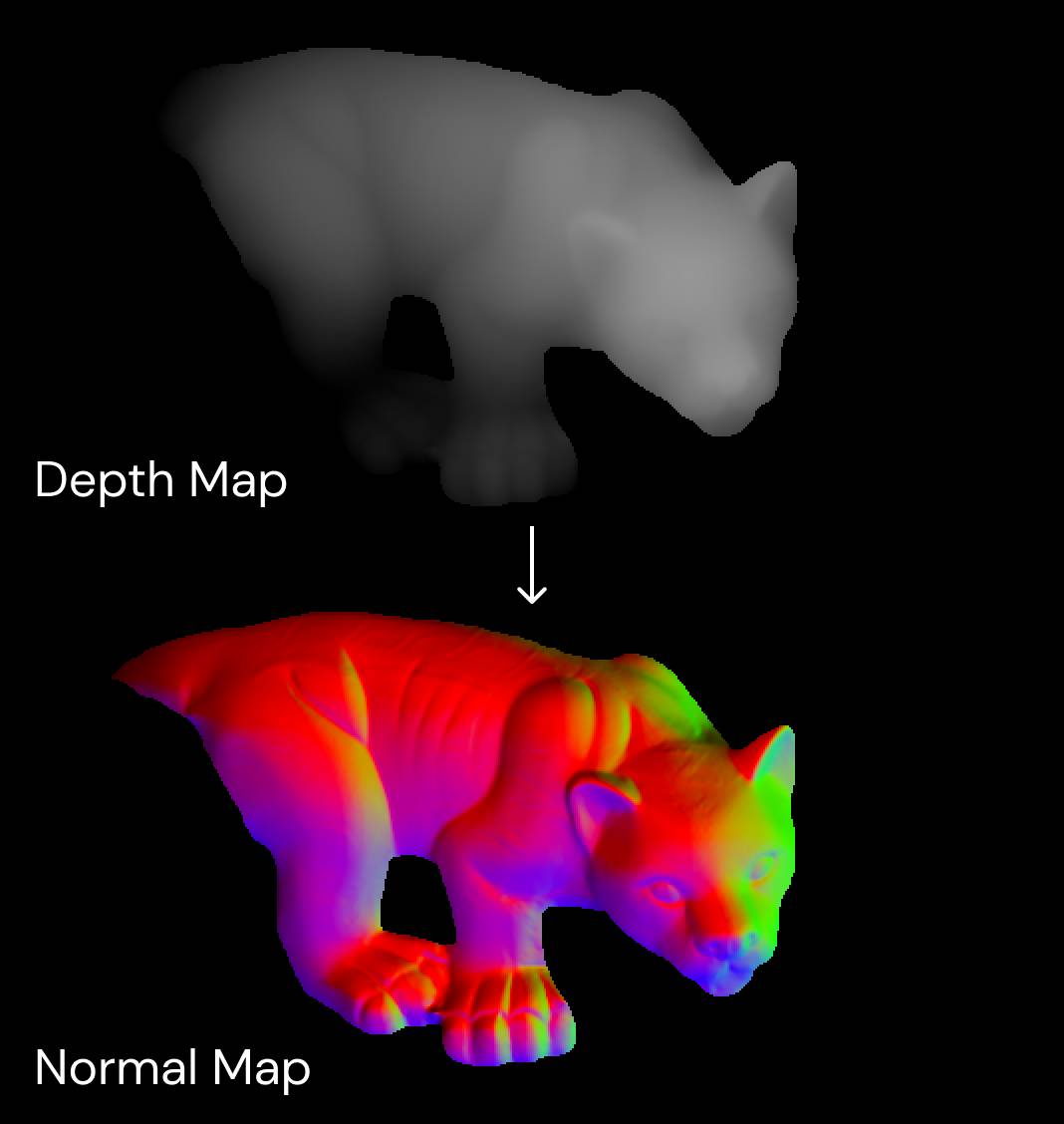

Before the depth maps from the cameras are converted into pointclouds, a normal map is computed from the cross product of vectors to neighboring pixels. The normal vector is then assigned to every vertex in the pointcloud. With a customized material for each vertex, the final prototype allows pointclouds to react to light sources in Unity.
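
The cross-product step can be sketched as follows: back-project a pixel and its right and lower neighbours into 3D, take the two difference vectors as surface tangents, and normalize their cross product. This assumes a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`, metric depth with 0 meaning "no reading", and forward differences; all names are hypothetical, and the actual prototype ran this kind of computation in Unity shaders rather than Python.

```python
import math
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def unproject(u: int, v: int, depth: float, fx: float, fy: float,
              cx: float, cy: float) -> Vec3:
    """Pinhole back-projection of pixel (u, v) at metric depth into camera space."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normal_map(depth: List[List[float]], fx: float, fy: float, cx: float,
               cy: float) -> List[List[Optional[Vec3]]]:
    """Per-pixel unit normals from a depth image (metres, 0 = no reading).

    Uses forward differences to the right and lower neighbours, so the
    last row and column (and pixels next to holes) get None.
    """
    h, w = len(depth), len(depth[0])
    normals: List[List[Optional[Vec3]]] = [[None] * w for _ in range(h)]
    for v in range(h - 1):
        for u in range(w - 1):
            d, d_right, d_down = depth[v][u], depth[v][u + 1], depth[v + 1][u]
            if d <= 0 or d_right <= 0 or d_down <= 0:
                continue  # missing depth: no reliable normal
            p = unproject(u, v, d, fx, fy, cx, cy)
            tangent_u = sub(unproject(u + 1, v, d_right, fx, fy, cx, cy), p)
            tangent_v = sub(unproject(u, v + 1, d_down, fx, fy, cx, cy), p)
            n = cross(tangent_u, tangent_v)
            length = math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
            if length == 0:
                continue
            n = (n[0] / length, n[1] / length, n[2] / length)
            # Flip so the normal faces the camera (camera at origin, looking along +z).
            if n[0] * p[0] + n[1] * p[1] + n[2] * p[2] > 0:
                n = (-n[0], -n[1], -n[2])
            normals[v][u] = n
    return normals
```

Differencing neighbouring depth samples amplifies per-pixel depth noise, so normals computed this way are noticeably noisier than the depth map they come from.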

Results

Surface Reconstruction

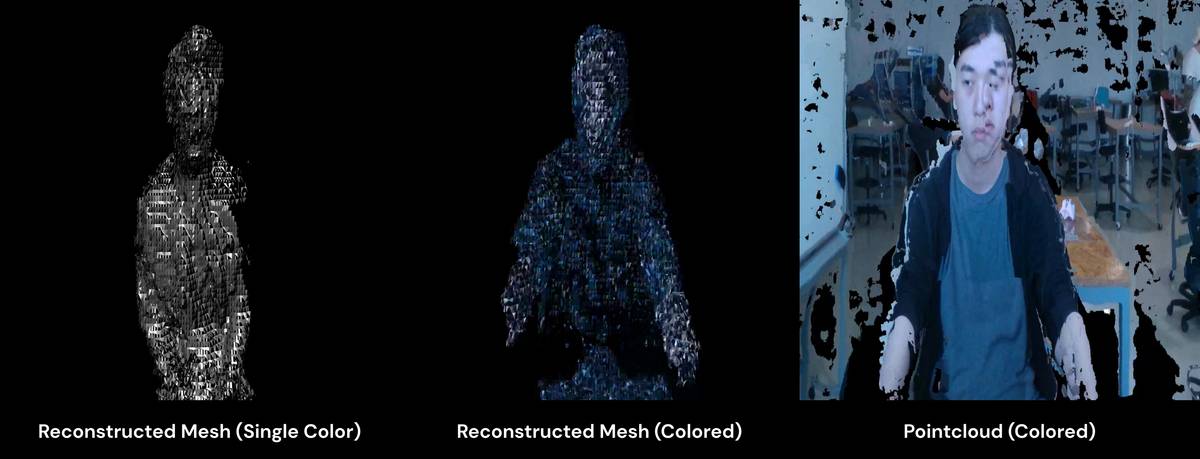

Our marching cubes implementation was relatively naive and did not achieve the best results the algorithm is capable of. Because of the memory bottleneck introduced by voxel filtering, the experiment was limited to a fairly low output resolution (256 × 256 × 256).

As a result, the reconstruction actually introduced more artifacts than it eliminated. Nonetheless, the experiment helped us understand the potential and limitations of marching cubes on TeleWindow.
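
A quick calculation shows why resolution is the pressure point. Assuming, purely for illustration, a single 32-bit float per voxel in one dense grid (the article does not state the actual data layout), memory grows with the cube of the resolution:

```python
def grid_bytes(resolution: int, bytes_per_voxel: int = 4) -> int:
    """Memory for one dense resolution^3 scalar grid."""
    return resolution ** 3 * bytes_per_voxel


for res in (128, 256, 512):
    mib = grid_bytes(res) / 2 ** 20
    print(f"{res}^3 voxels x 4 B = {mib:.0f} MiB")
# 128^3 voxels x 4 B = 8 MiB
# 256^3 voxels x 4 B = 64 MiB
# 512^3 voxels x 4 B = 512 MiB
```

Each doubling of resolution multiplies memory by eight, and these figures cover only one scalar field; any intermediate per-voxel buffers and the output vertex data come on top.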

In addition, despite the poor visual quality, our reconstructed mesh outperformed the original pointclouds in two respects:

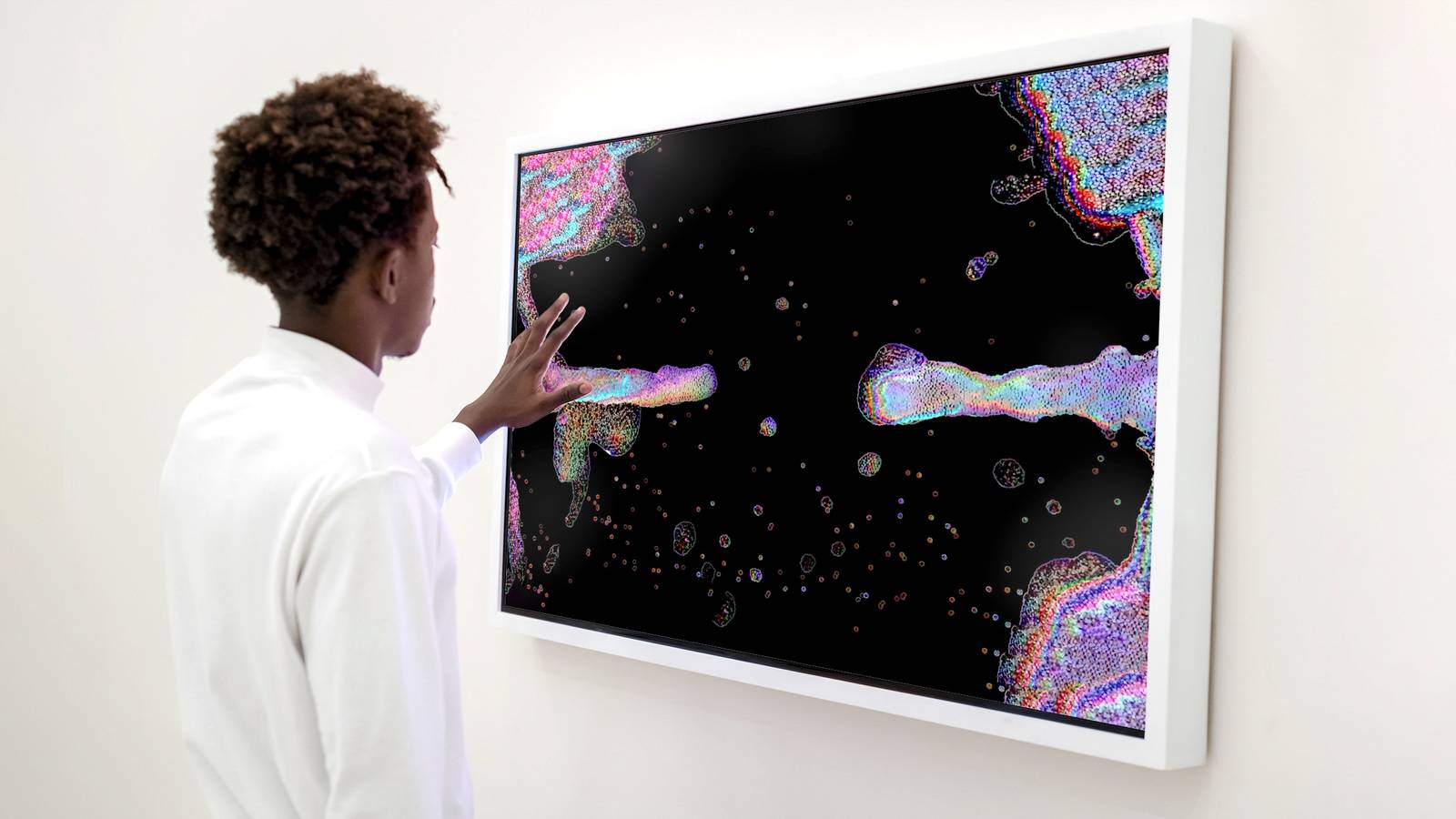

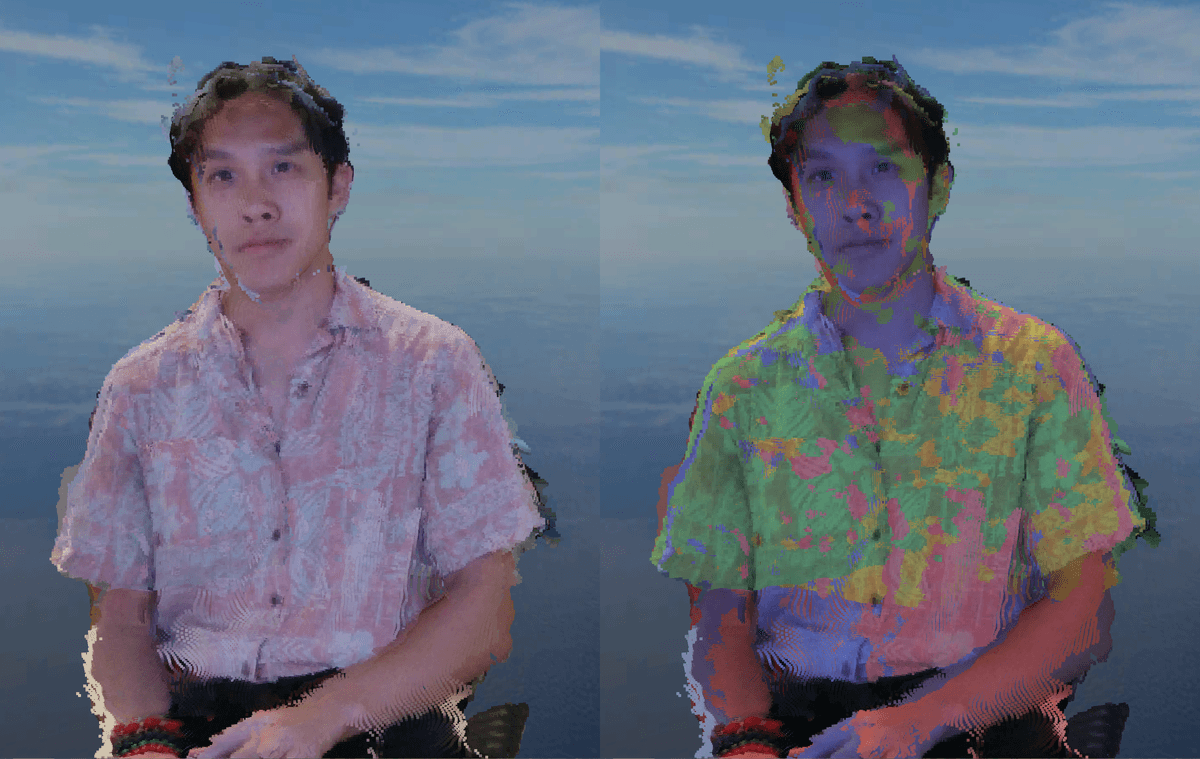

Virtual Relighting

The later relighting experiment attempted to bypass surface reconstruction with a more approachable technique. In the end, by calculating a normal vector for each point, this approach managed to give the pointcloud many volumetric traits.

The demo shows how the pointcloud reacted to directional lighting in a Unity scene.

The normal map contains clearly visible noise, despite my efforts to mitigate it through blurring and filtering. This suggests the noise stems from discrepancies between the four RGB-D cameras, which would require a deep dive into the cameras' image processing to resolve.
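
The text does not detail the blurring and filtering that were tried. As one hypothetical example of the kind of filter meant, the sketch below box-filters a normal map and renormalizes each result, since averaging unit vectors shortens them. `smooth_normals` is an illustrative name, and `None` marks pixels without a normal.

```python
import math
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
NormalMap = List[List[Optional[Vec3]]]


def smooth_normals(normals: NormalMap, radius: int = 1) -> NormalMap:
    """Box-filter a normal map and renormalize; None marks missing pixels.

    Averaging unit vectors shortens them, so each result is rescaled to
    unit length. Missing pixels stay missing and are skipped as neighbours.
    """
    h, w = len(normals), len(normals[0])
    out: NormalMap = [[None] * w for _ in range(h)]
    for v in range(h):
        for u in range(w):
            if normals[v][u] is None:
                continue
            sx = sy = sz = 0.0
            for dv in range(-radius, radius + 1):
                for du in range(-radius, radius + 1):
                    y, x = v + dv, u + du
                    if 0 <= y < h and 0 <= x < w and normals[y][x] is not None:
                        nx, ny, nz = normals[y][x]
                        sx, sy, sz = sx + nx, sy + ny, sz + nz
            length = math.sqrt(sx * sx + sy * sy + sz * sz)
            if length > 0:
                out[v][u] = (sx / length, sy / length, sz / length)
    return out
```

A filter like this averages away random per-pixel noise, but it cannot remove a consistent offset between what different cameras report for the same surface, which fits the observation above.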

Conclusions

Through my half-year of research, our team learned that there is probably no simple way to eliminate the identified visual artifacts, at least not through surface reconstruction. However, pointcloud post-processing has plenty of other potential for realizing useful features, such as relighting in a virtual scene.

Big tech companies have invested heavily in developing complex algorithms to perfect visual quality, many of them based on machine learning. However, for an academic research project that focuses on the novel experience of telepresence, the more cost-effective approach might be prototyping innovative use cases that probe users' reactions.

As for my personal takeaway, working on TeleWindow gave me a better understanding of the power of telepresence. Beyond all the technical work in computer graphics, TeleWindow broadened my view of what makes telepresence plausible and immersive beyond traditional HMDs. The simple combination of realtime 3D capture and a stereoscopic display blew me away 🤯.

Now I'm working on another project that utilizes a holographic display made by Looking Glass Factory. So please look forward to that 👀

Acknowledgement

Sincere thanks to Michael Naimark & Dave Santiano for providing so much guidance to onboard me to the project and advising me throughout the journey.