A technical experiment in real-time 3D fluid simulation and rendering on the TouchDesigner platform, carried out as summer research into new media installation techniques at OUTPUT.

Background

The experiment grew out of my interest in the topic and my technical research into Vincent Houzé's interactive installation works. At the time of this project, there were very few tutorials on rendering pipelines of this kind. My research and implementation therefore relied largely on Houzé's approach, which he partially shared on GitHub, and on established conventions in computer graphics.

Creating real-time interactive fluid visuals involves two parts: simulation and rendering.

Fluid Simulation

Building the simulation from scratch requires an understanding of basic fluid dynamics and of how to discretize it on the GPU. A standard approach is to implement a Lagrangian (particle-based) scheme as a GPGPU computation. The technique is commonly used for simulating liquid, smoke, fire, and fabric.
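As a rough illustration of what a Lagrangian scheme means, the sketch below advances a handful of particles on the CPU with semi-implicit Euler integration and a floor collision. It is a minimal sketch under stated assumptions, not the solver used in this project: the `Particle` class, the `step` function, the gravity constant, and the `restitution` parameter are all hypothetical names chosen for the example, and a real fluid solver would also handle particle-to-particle interaction and run on the GPU.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # assumed units: m/s^2 along the y axis


@dataclass
class Particle:
    position: list  # [x, y, z]
    velocity: list  # [vx, vy, vz]


def step(particles, dt, floor_y=0.0, restitution=0.5):
    """Advance every particle by one time step (semi-implicit Euler)."""
    for p in particles:
        # Update velocity from forces first, then position from the new velocity.
        p.velocity[1] += GRAVITY * dt
        for axis in range(3):
            p.position[axis] += p.velocity[axis] * dt
        # Simple collision: clamp to the floor plane and bounce with energy loss.
        if p.position[1] < floor_y:
            p.position[1] = floor_y
            p.velocity[1] = -p.velocity[1] * restitution


# Usage: drop one particle from a height of 1 and simulate at 60 steps per second.
particles = [Particle([0.0, 1.0, 0.0], [1.0, 0.0, 0.0])]
for _ in range(100):
    step(particles, dt=1.0 / 60.0)
```

Each particle carries its own state and is updated independently, which is why this kind of scheme maps well onto one GPU thread per particle.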

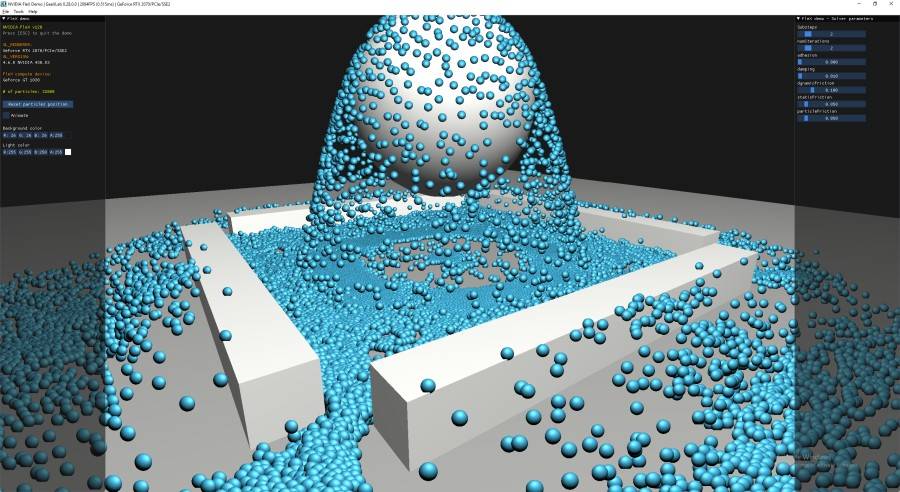

Houzé's approach is to integrate NVIDIA FleX, a real-time particle-based solver, with TouchDesigner through a C++ plugin. He released the plugin, FlexChop, as open source. It provides an interface in TouchDesigner for specifying a particle system and reading back the updated simulation state in real time as a CHOP.

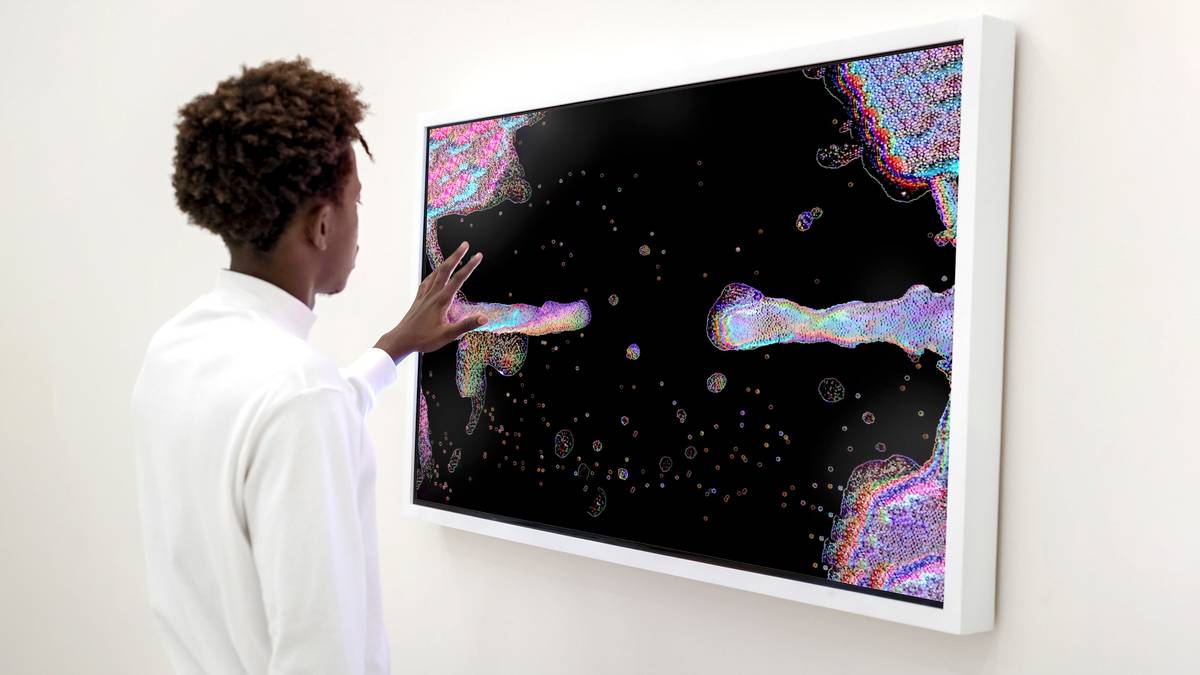

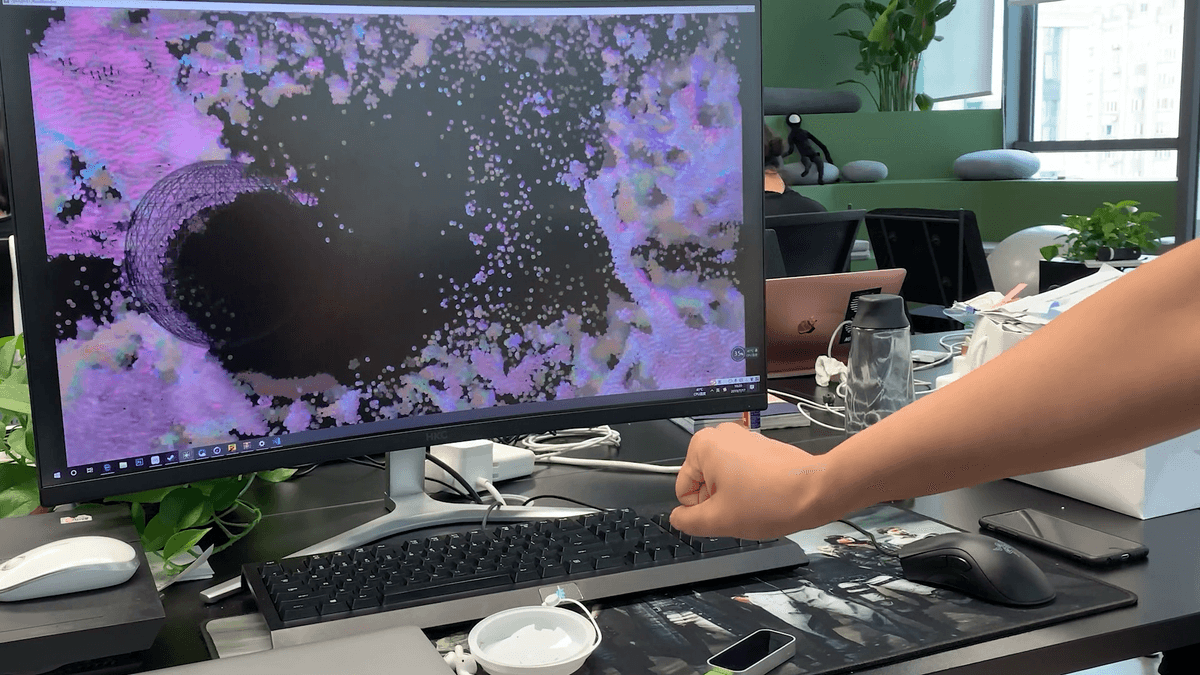

In addition, NVIDIA FleX accepts real-time input, such as geometry that collides with the fluid. I paired it with a Leap Motion controller to translate hand gestures and positions into controls for the physical environment.

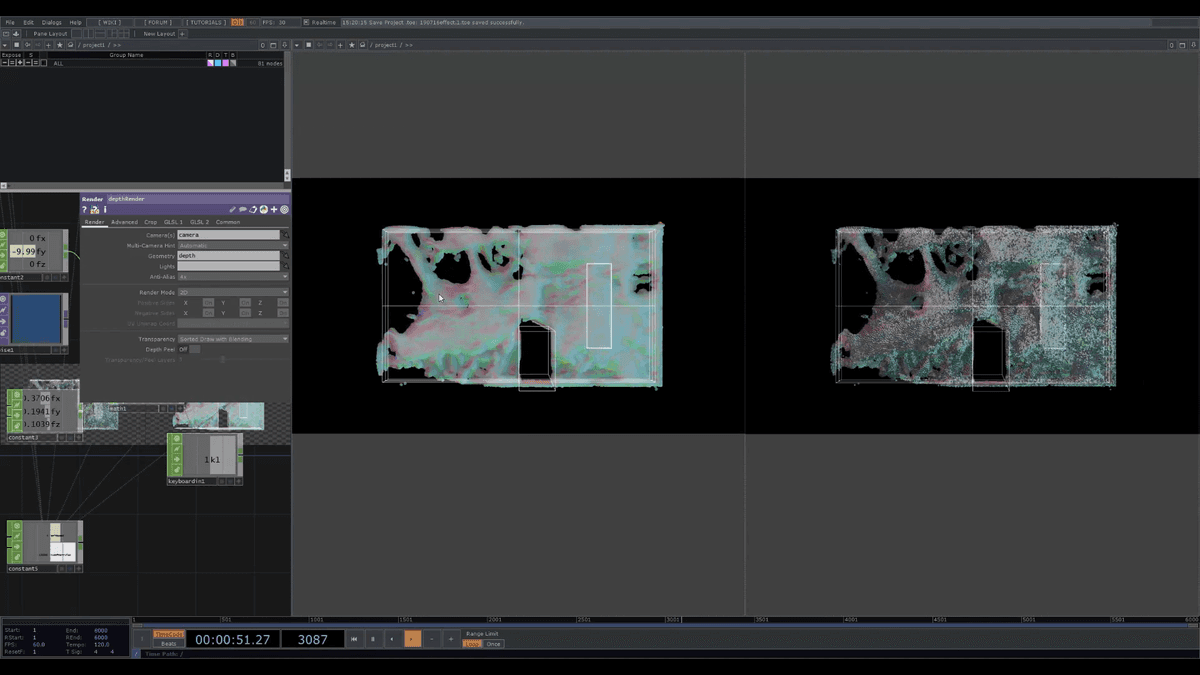

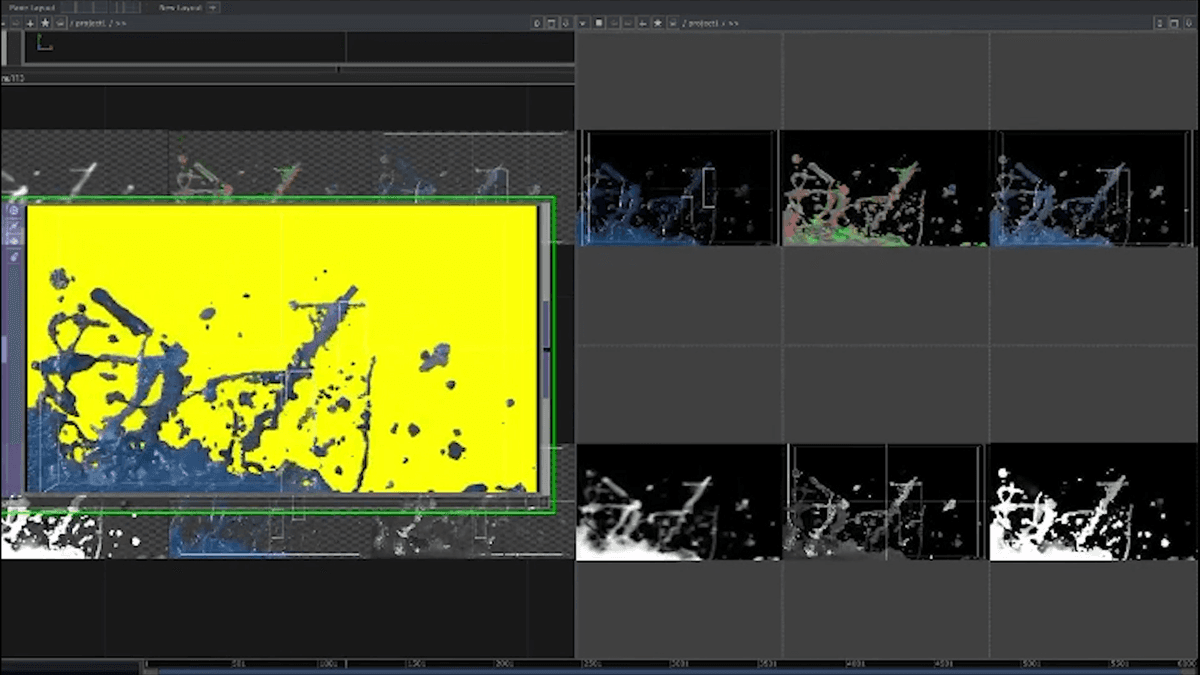

Screen-Space Rendering

Several techniques can give these particles the look of a fluid. Traditional surface reconstruction algorithms are generally too computationally expensive for real-time use. Game graphics instead take a screen-space approach: the final image is inferred from several renderings on the camera plane rather than from the true geometry of the fluid.

Following NVIDIA's talk at GDC 2010, the implementation uses several passes that render each particle as a sphere to generate a depth map, a normal map, and a density map, which are then used to calculate reflection and refraction. The passes are implemented as custom shaders in GLSL TOPs in TouchDesigner.
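To make the depth-to-normal step concrete, here is a minimal CPU sketch of how a normal map can be derived from a depth map with finite differences. It is not the project's shader code: the `normals_from_depth` function and its `pixel_size` parameter are hypothetical, and the sketch assumes an orthographic view and a depth map whose values grow toward the camera (for example, one minus normalized depth). A GLSL version would do the same arithmetic per fragment and would typically smooth the depth first.

```python
import math


def normals_from_depth(depth, pixel_size=1.0):
    """Estimate a unit normal per pixel from a 2D depth map (list of rows).

    Assumes an orthographic view: pixel (x, y) with value z sits at
    (x * pixel_size, y * pixel_size, z), with z growing toward the camera.
    """
    height, width = len(depth), len(depth[0])
    normals = [[(0.0, 0.0, 1.0)] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Central differences, falling back to one-sided at the border.
            x0, x1 = max(x - 1, 0), min(x + 1, width - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, height - 1)
            dzdx = (depth[y][x1] - depth[y][x0]) / (max(x1 - x0, 1) * pixel_size)
            dzdy = (depth[y1][x] - depth[y0][x]) / (max(y1 - y0, 1) * pixel_size)
            # Cross product of the tangents (1, 0, dzdx) and (0, 1, dzdy).
            nx, ny, nz = -dzdx, -dzdy, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            normals[y][x] = (nx / length, ny / length, nz / length)
    return normals


# Usage: a surface that rises steadily along x tilts its normals toward -x.
ramp = [[float(x) for x in range(3)] for _ in range(3)]
ramp_normals = normals_from_depth(ramp)
```

Because the normals come from the rendered depth image rather than from a reconstructed mesh, the cost depends on screen resolution and not on the particle count, which is the main appeal of the screen-space approach.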

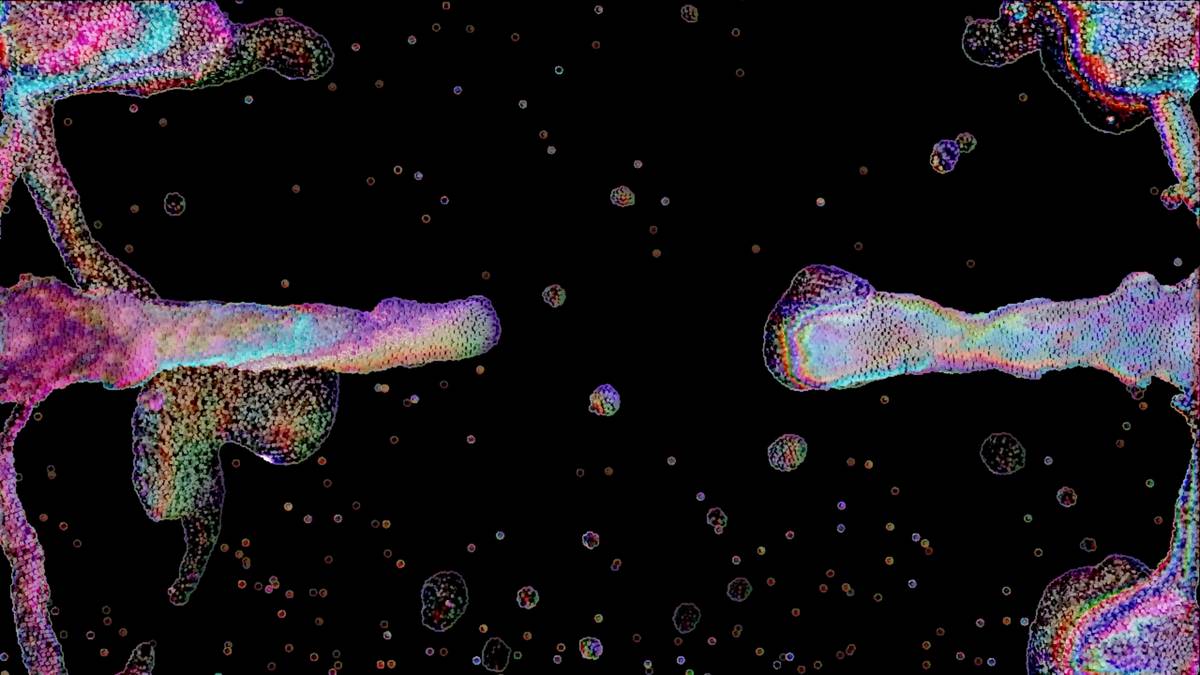

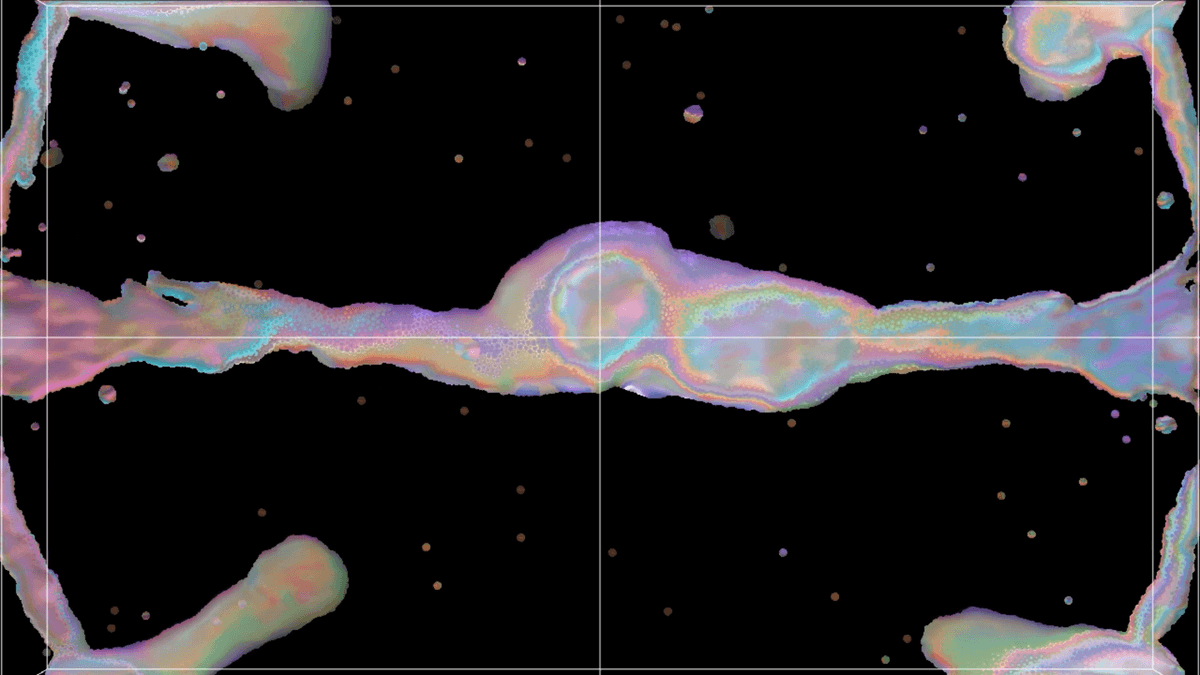

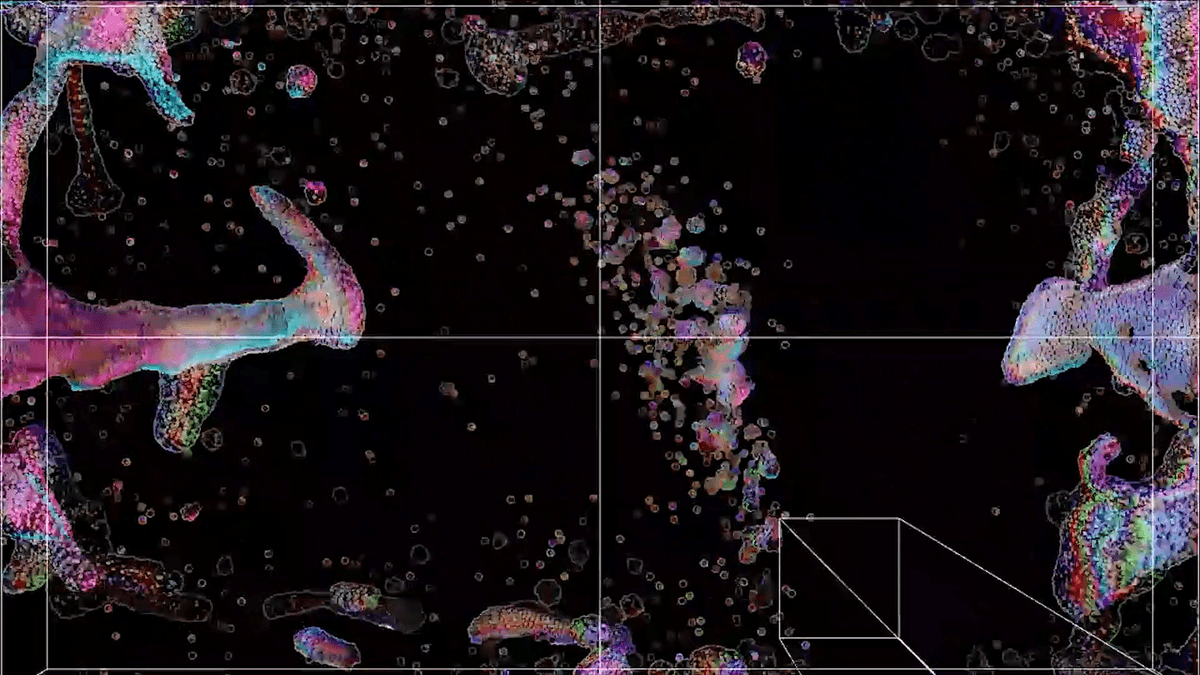

Stylized Rendering

With the foundation of the rendering framework in place, I started experimenting with different aesthetics by combining rendering techniques in TouchDesigner.

Acknowledgement

Special thanks to OUTPUT for providing the space, hardware, and opportunity.